In the previous post, we discussed how we are getting increasingly good at simulating complex systems and their interactions with the environment. This helps us answer the question, “what is most likely to work?”

The ability to simulate complex systems lets us screen thousands of possibilities before committing to a prototype. We don’t have to make and test every one of our hypotheses. We don’t even have to “guess” what is likely to work. We can be confident that, given a set of assumptions, a particular design should work best under a set of operating parameters.

Once the design of this prototype is finalised, we reach the next bottleneck: how close to our modelled specifications will our prototype actually be?

Our inability to manufacture our prototypes to the needed specifications was a major roadblock in the way of hardtech development. The nature of hardtech by itself demands precision in manufacturing on all scales.

The outsized impact of manufacturing on success: a case study

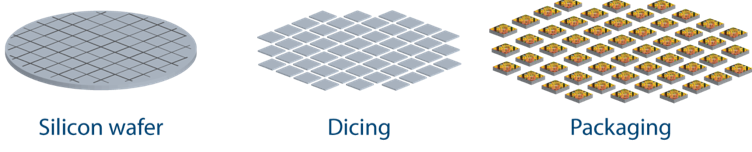

In 1980, Gene Amdahl, widely known as a pioneer of mainframe computer architecture for his work at IBM and later at his own Amdahl Corp, started his newest venture, Trilogy Systems. The goal was to build the world’s largest computing chip. At that time, state-of-the-art manufacturing processes could only fabricate chips that were 0.25 inch on a side. Traditionally, computer chips have been built by etching identical circuits onto silicon wafers. The wafers are then cut into thumbnail-sized squares, or chips.

Eventually, enough different chips are created so that they can be linked on a printed circuit board to form part of a computer. Mainframe computers at the time had hundreds of such chips. All these chips needed to communicate with each other for the end user to see a functional increase in computational power. When hundreds of chips have to communicate for every operation, performance suffers. Moreover, every operation becomes power-hungry, resulting in excessive power consumption.

Trilogy wanted to build chips which would be 2.5 inch on one side (10X of SOTA) that would be connected to the system with 1200 pins (30X of SOTA). It was the first audacious attempt at wafer-scale integration — fabricating multiple chips onto the same wafer and thereby, keeping all communication on chip. Current would flow over far shorter distances on Trilogy’s big chips and fewer chips would be needed — thus vastly accelerating the speed of data processing. This architecture would lead to higher performance and lower power consumption, a holy grail for computer architects.

Trilogy was fully aware they would face problems because of limitations in semiconductor photolithography processes. Functionally, this meant the following: if Trilogy started out wanting to have 100 chips on a single 2.5 inch wafer, they would actually end up with far fewer than 100. Why? Because semiconductor processing technologies are not perfect (in fact, they were quite bad in the 1980s) and it was extremely common to have faulty chips due to errors in the manufacturing processes. Trilogy knew this and made two design choices.

They chose to build in redundancy: all chips were replicated on the wafer thrice, such that if any were lost to fabrication error, there were two more which could be used.

They didn’t use the smallest possible transistor node: Trilogy knew that using the smallest node available would lead to even more non-functional chips. They chose to stay at the more robust ~3 micron node rather than the 2.5 micron node, which was available.

Trilogy was helmed by possibly the best brains to solve this problem. They made the most pragmatic design choices. They raised $276M to bring this audacious goal to fruition. How do you think this turned out?

Not well.

Apart from a host of non-technological problems, Trilogy capitulated to three major issues:

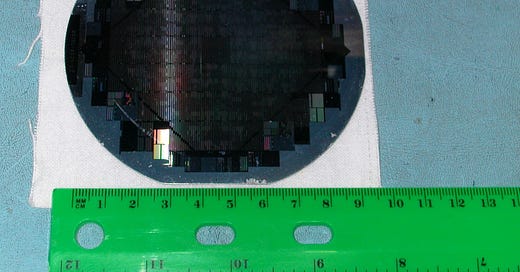

1. Semiconductor processing technologies were prone to manufacturing errors. A metric of relevance here is defects per square centimetre: how many random defects are introduced per unit area by the manufacturing process itself. Obviously, you want it to be as low as possible. In the 1980s, for these processes, this number was about 2. That means a 150 mm diameter wafer (roughly 177 cm² in area) would pick up about 350 defects. There is nuance in how these defects translate into loss of yield. See the figure below.

In the early 1980s, on average, the yield was about 25%. This means, crudely, that for every 1 chip that works, you have 3 that don’t. Now if you were going to cut up the wafer into individual chips and use them separately, you could just discard the chips that were faulty. But Trilogy couldn’t do this. Remember, it is all one big wafer. If a chip failed, they would make use of the redundant replicated chips on the wafer. But this is a tradeoff: below a certain yield, you are left with very few usable chips per wafer. Trilogy couldn’t get past this limitation. They produced a lot of faulty chips and then had to charge a huge price for the ones that turned out well, in turn reducing the appeal to customers.

2. When you bring 100 chips that were all supposed to be further apart very close together, you have very little space to lay out the wiring that connects them. More importantly, the engineering tolerance on these processes drops significantly, because a botched connection is now far more likely to cause an electrical short than it would be if the chips were further apart. This is a double whammy. Remember, there are 100 chips on a wafer. Not only does your new process need to be more precise on smaller dimensions, but you also run the risk of burning up all 100 chips if you mess up one connection. It’s all one big wafer!

3. In order to take advantage of the redundancy in the architecture, Trilogy had to lay out lots of microscopic wires in extremely small areas. The more wires you need to lay down, the less area you have left for the chips themselves. Trilogy then decided to move the chips further apart to improve the precision with which they could connect them. This is also a double whammy. When you move the chips further apart, you lose processing speed as data has to travel longer routes. Additionally, when you place chips farther away from each other, you can fit fewer chips in total on a given surface area. As a result, you lose more computational power.

Ultimately, the manufacturing precision of the era proved too low for Trilogy to accomplish what it set out to do.
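To put rough numbers on the first issue, here is a minimal back-of-the-envelope sketch. It uses the figures quoted above (2 defects per cm², a 150 mm wafer, ~25% chip yield, triplicated chips) and makes two simplifying assumptions of our own: chips fail independently of each other, and the wafer is only usable if every one of 100 distinct chip designs has at least one working copy. The function names are ours, purely for illustration; this is not how Trilogy modelled the problem.

```python
import math

def wafer_area_cm2(diameter_mm: float) -> float:
    """Area of a circular wafer in square centimetres."""
    radius_cm = diameter_mm / 10 / 2
    return math.pi * radius_cm ** 2

def expected_defects(defects_per_cm2: float, area_cm2: float) -> float:
    """Average number of random defects landing on the given area."""
    return defects_per_cm2 * area_cm2

def redundant_yield(chip_yield: float, copies: int) -> float:
    """Probability that at least one of `copies` independent replicas works."""
    return 1 - (1 - chip_yield) ** copies

def whole_wafer_yield(per_design_yield: float, n_designs: int) -> float:
    """Probability that every design has a working copy (assumes independence)."""
    return per_design_yield ** n_designs

defects = expected_defects(2.0, wafer_area_cm2(150))   # about 353
per_design = redundant_yield(0.25, copies=3)           # about 0.58
wafer = whole_wafer_yield(per_design, n_designs=100)   # vanishingly small

print(f"defects per wafer: {defects:.0f}")
print(f"chance a triplicated chip has a working copy: {per_design:.2f}")
print(f"chance all 100 designs work on one wafer: {wafer:.1e}")
```

Even with triplication, each design has only a little better than even odds of having a working copy, and requiring all of them to work at once drives the odds for a whole wafer towards zero. Real yield models are more nuanced than this, but the direction of the result is the point.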

How does this relate to our discussion?

In 2016, Cerebras took up the same challenge. Funded by Benchmark to the tune of $112M, they set out to build the world’s largest chip. After 3 years of work, Cerebras unveiled the WSE (Wafer Scale Engine): a single chip that contains more than 1.2 trillion transistors and is 46,225 square millimetres in size. This translates to a gargantuan 400,000 cores on a wafer.

What is different this time?

Over the 40 years between Trilogy and Cerebras, we have become extremely good at precision engineering. The metric we talked about, defects/cm², is now 0.09 as reported by Cerebras’ manufacturing partner TSMC. This is only going to improve. Yield (which used to be in the 20% range) is now routinely greater than 90%. Remember how Trilogy had to triplicate every chip because of low yields? Cerebras sets aside only 1–1.5% of the 400,000 cores for redundancy: yes, we are that good now. While Trilogy was built on the 3 micron node, Cerebras is built on the 16 nm node, almost 200X smaller. Those interconnects, which were such a problem for Trilogy? We have become proficient at handling much smaller interconnects (more than 100X smaller) and Cerebras has designed completely new strategies for approaching the issue.
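A rough sketch shows why such a small redundancy budget is plausible. It uses only the figures quoted above (46,225 mm², 400,000 cores, 0.09 defects/cm², 1–1.5% spare cores) plus two assumptions of ours: defects land uniformly at random, and each defect disables at most one core. Cerebras’ actual yield engineering is certainly more involved than this.

```python
DIE_AREA_MM2 = 46_225
CORES = 400_000
DEFECTS_PER_CM2_NOW = 0.09    # figure quoted for TSMC above
DEFECTS_PER_CM2_1980S = 2.0   # figure quoted for the early 1980s above

die_area_cm2 = DIE_AREA_MM2 / 100  # 100 mm^2 per cm^2

# Expected number of random defects on one wafer-scale chip.
defects_now = DEFECTS_PER_CM2_NOW * die_area_cm2
defects_1980s = DEFECTS_PER_CM2_1980S * die_area_cm2

# Spare cores available at the low and high end of the quoted 1-1.5% range.
spares_low = round(CORES * 0.01)
spares_high = round(CORES * 0.015)

print(f"expected defects today: {defects_now:.1f}")
print(f"expected defects at 1980s density: {defects_1980s:.1f}")
print(f"spare cores: {spares_low} to {spares_high}")
print(f"spares per expected defect: {spares_low / defects_now:.0f}x or more")
```

Under these assumptions a chip picks up a few dozen defects on average, against thousands of spare cores, so the redundancy budget covers the expected damage many times over.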

A rising tide lifts all boats: advanced manufacturing is touching every domain

The advances in precision engineering are not limited to the semiconductor industry. The semiconductor industry simply happens to be their most visible beneficiary. One other non-intuitive example is that of fusion reactors. In this domain, the goal is to direct several high-powered laser beams into extremely small areas to cause the nuclear reaction to begin. We need large mirrors with extremely low surface roughness (~0.5 nanometre) to steer laser beams precisely. If the surface of the mirror has any impurities or defects, the high-energy laser beam will shatter it instantly. There is practically no engineering tolerance for this application. The standards are exacting. Yet, we are getting there.

The Moore’s law equivalent for manufacturing is called the Taniguchi curve. Look at the line trace for ultraprecision machining and note how we are beginning to reach the numbers that are going to be required for hardtech development.

Advances in precision manufacturing are also well afoot in the life sciences domain.

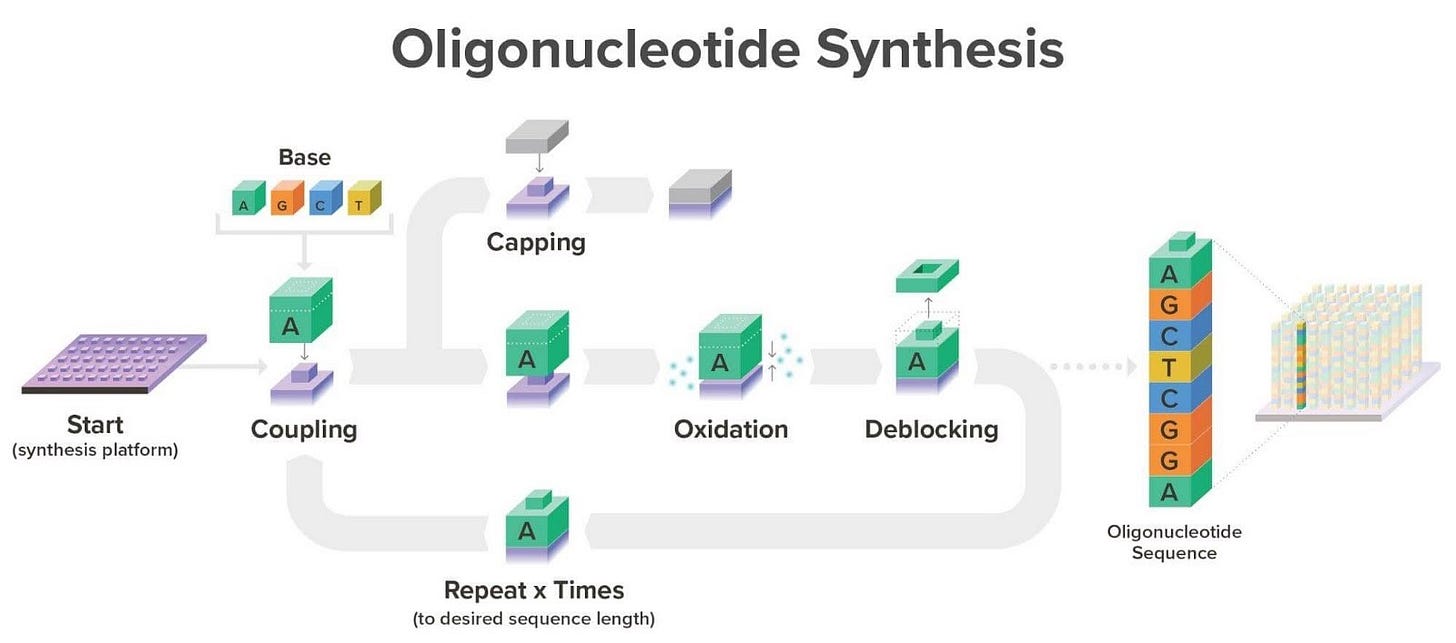

DNA synthesis is perhaps the most commonly used manufacturing process in the life sciences, and it is key to all kinds of biomanufacturing. DNA is composed of 4 nucleotides (A, T, G, C), and manufacturing strands of DNA involves stringing these one after the other in the desired sequence. The workhorse technology for synthesizing custom DNA strands, critical for all synthetic biology applications, is called phosphoramidite chemistry.

Until 2010, DNA synthesis by this technology was too unproductive. Users made far more DNA per run than they needed, far too slowly and at far higher cost than was sustainable for a high-throughput design-build-test cycle. As a result, users could only test a few designs before having to abandon ideas.

Twist Bioscience changed the paradigm by bringing semiconductor-fabrication-inspired miniaturisation to chemical DNA synthesis. It became possible to generate 10,000 strands of DNA on a single reaction platform.

By shrinking reaction volumes down by 1,000,000X and increasing throughputs by 1000X, Twist pioneered a new era in high-throughput, high-fidelity, low-cost DNA synthesis. What’s more, you could just order your DNA sequence online and it would be shipped to you, ready to use.

What about precision, though?

Applications for which you need to synthesise DNA have very little tolerance for error. In fact, a single error (if it occurs at particular positions) in the strand can lead to a non-functional variant of the protein you are trying to manufacture using this DNA sequence.

Phosphoramidite technology has inherent limitations that prevent it from improving much further. The synthesis error rate is high, as much as 0.2% per base, which limits the practically attainable length: once you reach a certain length, any individual oligo is virtually guaranteed to contain an error. This length is typically 100–200 nucleotides. Newer applications (synthetic biology, gene editing, DNA therapeutics, and DNA nanotechnology) require much longer sequences. We address the current limitations on strand length by adopting workarounds: synthesise shorter fragments (while maintaining accuracy), stitch them together to form a longer strand, fill in the complementary strand, and then perform various kinds of error correction. This increases cost, decreases throughput and is especially difficult to use for assembly of sequences that contain repetitive regions.
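The way a per-base error rate compounds with length can be sketched in a few lines. This is a simplified model of our own, assuming every base is added with the same independent error probability and using the 0.2% upper figure quoted above; real synthesis errors are not this uniform, and the function name is purely illustrative.

```python
def error_free_fraction(length_nt: int, per_base_error: float = 0.002) -> float:
    """Share of strands with zero errors, assuming independent per-base errors."""
    return (1 - per_base_error) ** length_nt

# Oligo-scale lengths versus gene-scale lengths.
for length in (100, 200, 1_000, 3_000):
    share = error_free_fraction(length)
    print(f"{length:>5} nt: {share:.1%} of strands are error-free")
```

The share of perfect strands shrinks geometrically with every added base, so by the time you reach gene-scale lengths of a few thousand nucleotides almost nothing comes out error-free. That is why longer constructs are stitched together from short fragments and then error-corrected.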

What we need is low-cost, high-accuracy, high-throughput manufacturing of DNA which is independent of length of the strand.

Enter: enzymatic DNA synthesis

When eukaryotic cells replicate DNA, they can do so with extremely high accuracy. The enzymes they employ make 1 error per 10⁷ bases or more. This is roughly 10⁴ to 10⁵ times better than our chemical DNA synthesis. Deservedly, enzymatic DNA synthesis is receiving a lot of attention and venture capital. At least 4 startups with proprietary enzymes (Ansa, Molecular Assemblies, Nuclera and DNA Script) are making rapid strides towards making gene-length DNA synthesis a reality.
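One way to feel the size of that gap is to ask how long a strand can get before half of all copies contain at least one error. The sketch below assumes independent per-base errors and plugs in the two rates mentioned in this post: 0.2% for chemical synthesis and 1 in 10⁷ for cellular replication enzymes. Note the second number describes replication inside cells, not the measured performance of any commercial enzymatic synthesis product, so treat it as an aspirational ceiling. The function name is ours.

```python
import math

def length_at_error_free_share(per_base_error: float, share: float = 0.5) -> float:
    """Strand length at which only `share` of copies remain error-free."""
    # Solve (1 - p) ** n == share for n; log1p keeps precision for tiny p.
    return math.log(share) / math.log1p(-per_base_error)

chemical = length_at_error_free_share(2e-3)     # a few hundred bases
replication = length_at_error_free_share(1e-7)  # millions of bases

print(f"chemical synthesis: ~{chemical:,.0f} nt")
print(f"replication-grade enzymes: ~{replication:,.0f} nt")
print(f"ratio: ~{replication / chemical:,.0f}x")
```

At chemical error rates the halfway point arrives after a few hundred bases; at replication-grade accuracy it sits millions of bases out, far beyond the length of any single gene.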

When their offerings hit the market, synthetic biologists’ efficiency per dollar will skyrocket. If biomanufacturing has already made it this far despite all the inherent limitations of something so critical to it as DNA synthesis, imagine where we can go when that bottleneck is released. Enzymatic DNA synthesis will not only make sure that our designs are manufactured with molecular precision, it will also allow us to test more designs and identify our highest-performing ones at lower cost.

This has been a look at how advances in simulation and precision manufacturing are radically changing how we can conceive of and execute on bold ideas.

In the next part, we will discuss how the ecosystem of hardtech is evolving to tackle another challenge: high capex.